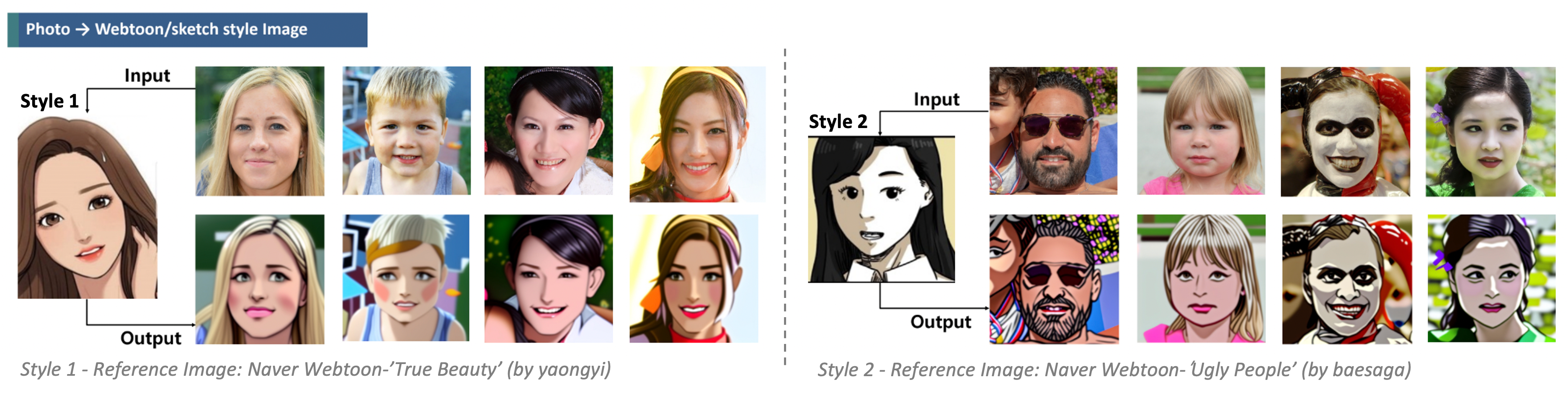

Preview of the Demo

Image-to-Image Translation demo video

Text-to-Image Generation guide video

Text-to-Image Generation demo video

View Project & Code

Getting Started

We recommend running our code using:

- NVIDIA GPU + CUDA, CuDNN

- Python 3, Anaconda

1. Installation

Clone the repositories.

git clone https://github.com/ssoojeong/Webtoon_InST.git

git clone https://github.com/zyxElsa/InST.git

Run the following commands to install the necessary packages.

conda env create -f environment.yaml

conda activate ldm
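After activating the environment, a quick sanity check can confirm that the recommended setup is in place. The snippet below is a minimal sketch and not part of the repository. It assumes that environment.yaml installs PyTorch under the module name `torch`; the function name `check_environment` is hypothetical.

```python
import importlib.util
import sys


def check_environment():
    """Report whether the recommended setup (Python 3, PyTorch with CUDA) is present."""
    report = {
        "python3": sys.version_info.major == 3,
        # Assumption: the conda environment provides PyTorch as the module "torch".
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        try:
            import torch

            report["cuda_available"] = bool(torch.cuda.is_available())
        except Exception:
            # A broken install is treated the same as having no usable GPU.
            report["cuda_available"] = False
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

If `cuda_available` is reported as missing, the demo may still start, but inference will likely be much slower than on the recommended GPU setup.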

2. Pretrained Models for Webtoon_InST Inference

Download the pretrained models and save each one to the indicated location.

| Pretrained Model | Save Location | Reference Repo/Source |
|---|---|---|
| Stable Diffusion | ./InST/models/sd/sd-v1-4.ckpt | CompVis/stable-diffusion |
| YeosinGangrim | ./InST/logs/yeosin/ | 여신강림, Naver Webtoon |
| UglyPeoples | ./InST/logs/ugly/ | 어글리피플즈, Naver Webtoon |
| YumiSepo | ./InST/logs/yumi/ | 유미의세포, Naver Webtoon |
| Other style | ./InST/logs/etc/ | An image from the InST (CVPR 2023) paper |
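
Before launching the demo, it can help to confirm that every download ended up where the table says it should. The following is a minimal sketch, not part of the repository: the helper name `find_missing_models` is hypothetical, and it assumes the script is run from the directory that contains the cloned InST folder. It checks only that something exists at each location, not that the files are valid checkpoints.

```python
from pathlib import Path

# Locations copied from the table above, relative to the directory
# that contains the cloned InST repository.
EXPECTED = {
    "Stable Diffusion": "InST/models/sd/sd-v1-4.ckpt",
    "YeosinGangrim": "InST/logs/yeosin",
    "UglyPeoples": "InST/logs/ugly",
    "YumiSepo": "InST/logs/yumi",
    "Other style": "InST/logs/etc",
}


def find_missing_models(root="."):
    """Return names of models with nothing at the expected location.

    A location counts as present if it is a file, or a folder that
    contains at least one entry.
    """
    missing = []
    for name, rel in EXPECTED.items():
        path = Path(root) / rel
        if path.is_file():
            continue
        if path.is_dir() and any(path.iterdir()):
            continue
        missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing_models(".")
    print("All models found." if not missing else f"Missing: {', '.join(missing)}")
```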

3. Implementation

Run the following command and open the shared link.

python demo_canny.py

- The Gradio app lets you change hyperparameters (steps, style guidance scale, etc.).

- Sample images from the FFHQ dataset are provided in ./data/face, so you can use them for testing.

4. Results

- After translating an image with the Gradio app, check the generated folder, ./demo_output.

- Inside this folder, you’ll find subfolders such as ./demo_output/yeosin, ./demo_output/ugly, ./demo_output/love, and ./demo_output/etc, each containing images transformed into the corresponding webtoon style.
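
To see at a glance how many results each style has produced, the output folder can be summarized with a few lines of Python. This is a minimal sketch and not part of the repository: the function name `summarize_outputs` is hypothetical, and the set of image extensions is an assumption about how the demo saves its results.

```python
from pathlib import Path

# Assumption: generated images are saved with one of these extensions.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def summarize_outputs(output_dir="./demo_output"):
    """Map each style subfolder name to the number of image files it contains."""
    root = Path(output_dir)
    if not root.is_dir():
        # Nothing has been generated yet (or the path is wrong).
        return {}
    return {
        sub.name: sum(1 for f in sub.iterdir() if f.suffix.lower() in IMAGE_SUFFIXES)
        for sub in sorted(root.iterdir())
        if sub.is_dir()
    }


if __name__ == "__main__":
    for style, count in summarize_outputs().items():
        print(f"{style}: {count} image(s)")
```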

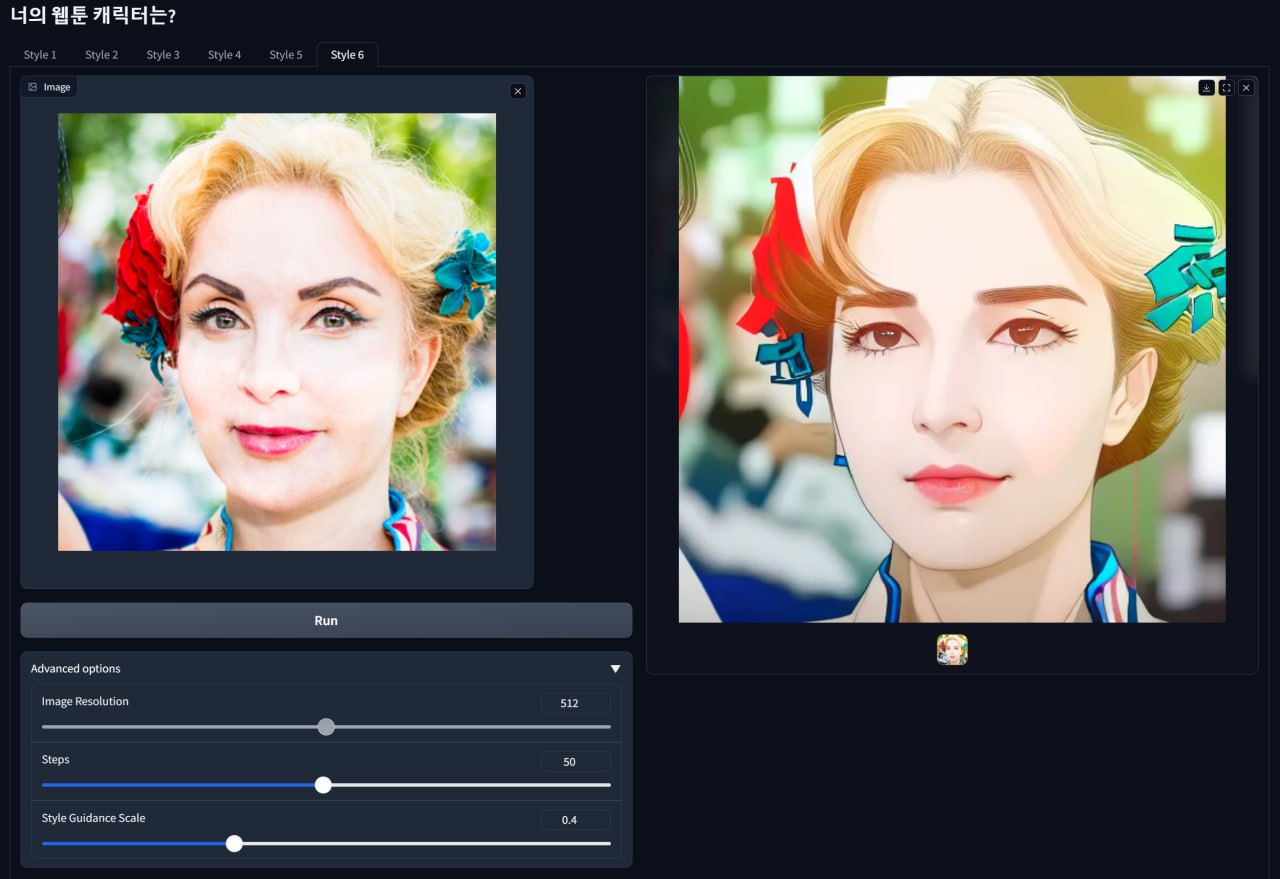

🎨 Image Samples

cf. Different style guidance scales for background and foreground

If you want to give different style guidance to the background and foreground, clone the repository below and use it.

git clone https://github.com/xuebinqin/DIS.git

The implementation code is already included in the inference Python file; detailed usage instructions will be added later.
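
One way to picture separate guidance for foreground and background is as a mask-weighted composite of two stylized results. The sketch below only illustrates that idea with NumPy and is not the repository's implementation. The function name `blend_by_mask`, the [0, 1] mask convention, and the approach of compositing two already-stylized images (one generated at each guidance scale) are all assumptions; the mask is assumed to come from a segmentation model such as DIS.

```python
import numpy as np


def blend_by_mask(fg_styled, bg_styled, mask):
    """Composite two stylized images using a foreground mask.

    fg_styled, bg_styled: arrays of shape (H, W, 3) holding the same content
        image stylized at the foreground and background guidance scales
        (hypothetical inputs).
    mask: array of shape (H, W) with values in [0, 1]; 1 marks foreground.
    """
    fg = np.asarray(fg_styled, dtype=np.float32)
    bg = np.asarray(bg_styled, dtype=np.float32)
    # Clip to [0, 1] and add a channel axis so the mask broadcasts over RGB.
    m = np.clip(np.asarray(mask, dtype=np.float32), 0.0, 1.0)[..., None]
    if fg.shape != bg.shape or fg.shape[:2] != m.shape[:2]:
        raise ValueError("images and mask must share the same height and width")
    # Foreground pixels come from fg, background pixels from bg;
    # soft mask values blend the two linearly.
    return m * fg + (1.0 - m) * bg
```

A soft (non-binary) mask avoids a hard seam along the subject's outline, which is why the mask is kept as floating point rather than thresholded.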

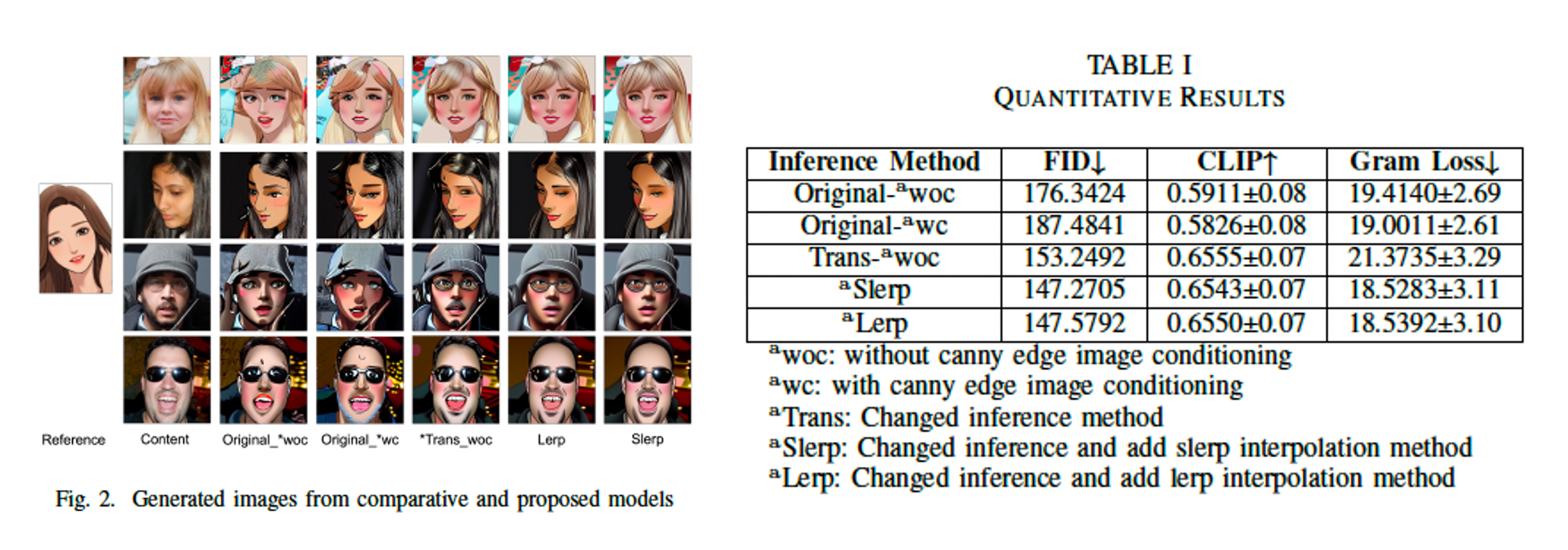

Additional Experiments and Reports

Preview of Report

- We evaluated the proposed method's style transfer performance on human faces, using Naver Webtoon images as style images and FFHQ dataset images as content images.